Why neutral infrastructure is crucial for secure data exchange

By Guido Coenders

Analysts predict an enormous surge in data production in the coming years. As a result, there are widespread calls for businesses to monetize the data generated by the more than 100 billion IoT devices predicted to exist by 2030. At the same time, there are concerns that internal data alone will not be sufficient to maintain a competitive advantage.

The key reason is that external data sources provide valuable input for algorithms, which in turn can enable new business models. Against this background, the question arises of how data can be shared between all parties involved in a secure and controlled manner.

Data marketplaces as a new model for value creation

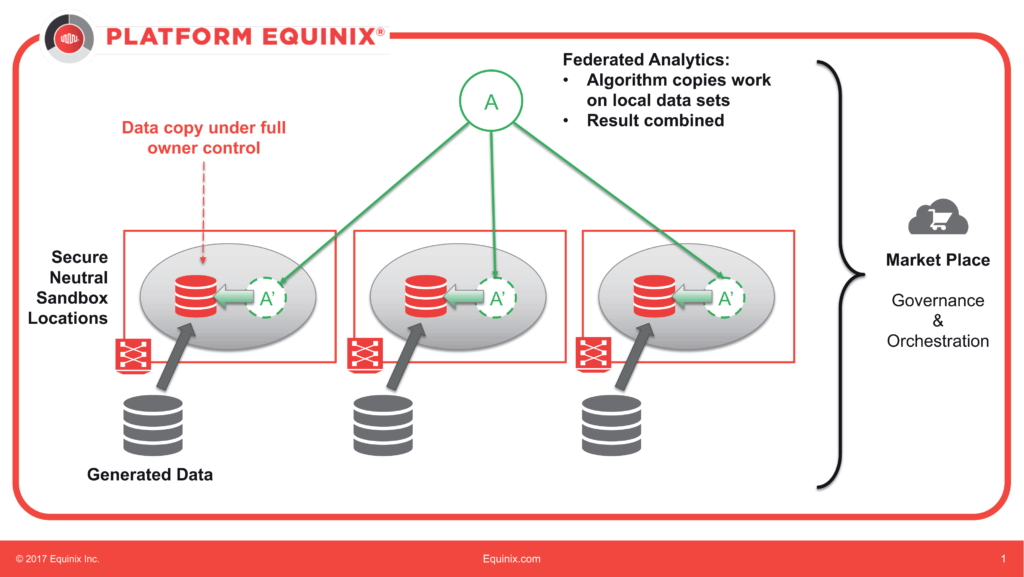

A potential solution to this challenge is the use of data marketplaces that define rules for data exchange and ensure that the rules agreed between the parties are automatically executed and complied with. To further optimize the exchange mechanism of a data marketplace, so-called sandboxes can be used. These act as a neutral place where data and algorithms can meet. Data and intellectual property remain under the control of their owners while interacting securely within the sandbox, out of sight of external actors.
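The sandbox idea can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `Agreement` and `Sandbox` classes, the rule fields and the sample readings are hypothetical and do not describe any particular marketplace product. The sketch only shows the principle that agreed rules are checked automatically and that only a result, not the raw data, leaves the sandbox. A real sandbox would need genuine isolation, which a Python object does not provide.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Sequence


@dataclass(frozen=True)
class Agreement:
    """Hypothetical rules agreed between data owner and algorithm owner."""
    allowed_algorithms: FrozenSet[str]
    min_records: int  # refuse to run on inputs small enough to leak single records


class Sandbox:
    """Neutral place where an algorithm is applied to data it never sees directly."""

    def __init__(self, agreement: Agreement, data: Sequence[float]) -> None:
        self._agreement = agreement
        self._data = list(data)  # held inside the sandbox, never returned

    def run(self, name: str, algorithm: Callable[[Sequence[float]], float]) -> float:
        # Enforce the agreed rules before anything is executed.
        if name not in self._agreement.allowed_algorithms:
            raise PermissionError(f"algorithm '{name}' is not covered by the agreement")
        if len(self._data) < self._agreement.min_records:
            raise PermissionError("too few records to run under the agreement")
        # Only a single number is handed back to the caller.
        return float(algorithm(self._data))


# Hypothetical example: three temperature readings and one approved algorithm.
agreement = Agreement(allowed_algorithms=frozenset({"mean_temperature"}), min_records=3)
sandbox = Sandbox(agreement, [610.0, 625.0, 640.0])
result = sandbox.run("mean_temperature", lambda xs: sum(xs) / len(xs))
```

Calling `sandbox.run` with a name that is not in `allowed_algorithms` raises `PermissionError`, which stands in for the marketplace refusing a transaction that falls outside the agreed rules.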

In addition, federated analytics applications make it possible to run the same analytics on multiple data sets in different geographic locations and to combine the partial results into one consolidated result. This removes the need to move large data sets over long distances and improves the owners' control over their data.
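As a minimal sketch of this principle, the example below computes a global mean and variance without moving any raw readings: each site reduces its data to three numbers, and only those summaries are combined centrally. The site names, the readings and the choice of statistic are assumptions for illustration; real federated analytics would typically run far more complex models, but the pattern of local computation followed by central combination is the same.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class LocalSummary:
    """Aggregate statistics computed where the data lives."""
    count: int
    total: float
    total_sq: float


def summarize(readings: Sequence[float]) -> LocalSummary:
    """Runs at each site; only these three numbers leave the site."""
    return LocalSummary(
        count=len(readings),
        total=sum(readings),
        total_sq=sum(x * x for x in readings),
    )


def combine(summaries: Sequence[LocalSummary]) -> Tuple[float, float]:
    """Merge per-site summaries into a global mean and population variance."""
    n = sum(s.count for s in summaries)
    if n == 0:
        raise ValueError("no data at any site")
    mean = sum(s.total for s in summaries) / n
    variance = sum(s.total_sq for s in summaries) / n - mean * mean
    return mean, variance


# Hypothetical readings held at three separate sites.
sites = {
    "site_a": [1.0, 2.0, 3.0],
    "site_b": [4.0, 5.0],
    "site_c": [6.0],
}
summaries = [summarize(readings) for readings in sites.values()]
global_mean, global_variance = combine(summaries)
```

The combined result is identical to what a central computation over all six readings would give, yet each site transmits three numbers regardless of how many readings it holds.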

Finally, neutral infrastructure is essential to provide a trusted and secure location for all parties, and thus to enable sandbox and federated analytics capabilities. The sandbox and federated analytics functions that make up this neutral infrastructure can be located in a public cloud or in a number of geographically distributed neutral data centers.

Key points

The data marketplace as the key to successful AI implementation:

1. Big data analytics at the source of the data

2. Secure and controlled data access

3. Globally scalable and cost-efficient due to the neutral infrastructure platform

Field test in the aviation industry

The global data center company Equinix has implemented such an extended architecture for data generated by airplane engines. The data was used to train an algorithm for predictive engine maintenance. For this purpose, data sets were hosted at three locations around the world, and a sandbox was set up at each location so that the algorithm and the data could "meet" there.

“Neutral infrastructure ensures that both sandbox and federated analytics applications are able to run in a trusted and secure location for all parties.”

This case shows that the extended architecture can work in practice: the data marketplace is able to govern transactions, create the sandbox, execute the analytics and finally control the data exchange. The federated analytics solution delivers results of similar quality to central analytics. In addition, the amount of data moved across the world was less than one thousandth of the amount moved in a central set-up.

These promising results show that several challenges in the global exchange and analysis of big data can already be met today. Similar initiatives are being developed not only in the aviation industry, but also in the financial, healthcare, automotive and government sectors.

Lead image / Source / License

Image by Gerd Altmann on Pixabay